Reliability in research refers to the consistency of a measure. A test is considered reliable if it produces the same results under consistent conditions. There are several types of reliability, each suited to different contexts and research designs. This guide covers the main types and shows how to assess each one in SPSS Statistics.

Types of Reliability

Internal Consistency

Internal consistency measures whether several items that purport to measure the same general construct produce similar scores. One common way to assess it is Cronbach’s Alpha. To compute it in SPSS, follow these steps:

- Go to Analyze > Scale > Reliability Analysis.

- Select the items you want to test and move them to the Items box.

- Click Statistics and make sure that "Scale if item deleted" and "Correlations" are checked.

- Click Continue and then OK.

The output will show Cronbach’s Alpha in the Reliability Statistics table. By convention, an alpha above 0.7 is taken to indicate acceptable internal consistency.
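For reference, the coefficient is usually defined as shown below. The symbols are introduced only for this sketch and are not SPSS output labels: $k$ is the number of items, $s_i^2$ is the variance of item $i$, and $s_T^2$ is the variance of the respondents' total scores.

$$
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} s_i^2}{s_T^2}\right)
$$

When the items covary strongly, the total-score variance is much larger than the sum of the item variances, so the ratio shrinks and alpha moves toward 1.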

Test-Retest Reliability

Test-retest reliability assesses the consistency of a measure from one time to another. It can be estimated by correlating scores on the same test administered to the same respondents at two different points in time. In SPSS, use the following steps:

- Enter the data for the two time points in separate columns.

- Go to Analyze > Correlate > Bivariate.

- Select the two variables and move them to the Variables box.

- Click OK.

The output will provide the Pearson correlation coefficient between the two administrations. The closer it is to 1, the more stable the scores are over time.
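As a reminder of what that coefficient measures, here is the standard definition, written with symbols chosen for this sketch: $x_i$ and $y_i$ are respondent $i$'s scores at the first and second administration, and $\bar{x}$ and $\bar{y}$ are the corresponding means.

$$
r = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i-\bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^2}}
$$

Because the means are subtracted out, $r$ reflects whether respondents keep their relative standing, not whether their scores stay the same; a uniform shift in everyone's score between the two sessions leaves $r$ unchanged.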

Inter-Rater Reliability

Inter-rater reliability measures the extent to which different raters or observers give consistent ratings of the same phenomenon. For two raters assigning categorical ratings, a common measure is Cohen’s Kappa, which corrects the observed agreement for the agreement expected by chance. Here’s how to calculate it in SPSS:

- Enter the ratings from different raters in separate columns.

- Go to Analyze > Descriptive Statistics > Crosstabs.

- Move one rating variable to the Row(s) box and the other to the Column(s) box.

- Click Statistics and check Kappa.

- Click Continue and then OK.

The output will include Cohen’s Kappa value, which ranges from -1 to 1. Values closer to 1 indicate higher agreement between raters.
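The chance correction behind Kappa can be written out as follows. The notation is an assumption made for this sketch: $p_o$ is the observed proportion of cases on which the raters agree, and $p_e$ is the agreement expected by chance, obtained from each rater's marginal proportions $p_{1c}$ and $p_{2c}$ for category $c$.

$$
\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad p_e = \sum_{c} p_{1c}\, p_{2c}
$$

Kappa equals 1 when the raters agree on every case, 0 when they agree no more often than chance would predict, and falls below 0 when they agree less often than chance.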

Example Analysis in SPSS

Internal Consistency with Cronbach’s Alpha

Suppose we have a questionnaire with five items designed to measure job satisfaction. We want to test the internal consistency of this questionnaire.

Data for five respondents are as follows:

| Respondent | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 |
|---|---|---|---|---|---|
| 1 | 4 | 3 | 5 | 4 | 5 |
| 2 | 5 | 4 | 4 | 5 | 4 |
| 3 | 3 | 4 | 4 | 3 | 5 |
| 4 | 4 | 4 | 5 | 5 | 5 |
| 5 | 5 | 5 | 4 | 4 | 5 |

To analyze this data in SPSS:

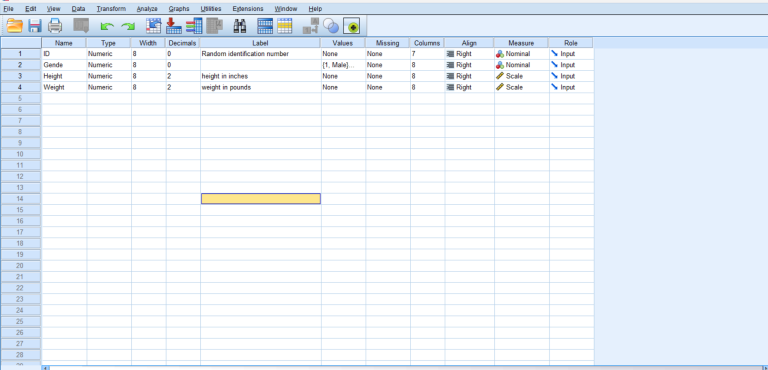

- Enter the data into SPSS.

- Go to Analyze > Scale > Reliability Analysis.

- Select the five items and move them to the Items box.

- Click Statistics and make sure that "Scale if item deleted" and "Correlations" are checked.

- Click Continue and then OK.

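The output will show Cronbach’s Alpha. Suppose, for illustration, that it is 0.85 (an illustrative figure, not one computed from the five rows above); a value that high would indicate good internal consistency. With only five respondents any real estimate is unstable, so treat this table as a walkthrough of the procedure and not as a realistic sample.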
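To see what the coefficient looks like when computed directly from the five rows in the table, the calculation can be sketched outside SPSS. This is a minimal Python sketch using only the standard library; the function name `cronbach_alpha` and the variable names are chosen here for illustration, and it assumes sample variances (dividing by n - 1).

```python
from statistics import variance

# Item scores from the table above: one row per respondent, one column per item.
scores = [
    [4, 3, 5, 4, 5],
    [5, 4, 4, 5, 4],
    [3, 4, 4, 3, 5],
    [4, 4, 5, 5, 5],
    [5, 5, 4, 4, 5],
]


def cronbach_alpha(rows):
    """Cronbach's alpha from respondent-by-item rows (sample variances, n - 1)."""
    k = len(rows[0])                                    # number of items
    item_vars = [variance(col) for col in zip(*rows)]   # variance of each item
    total_var = variance([sum(row) for row in rows])    # variance of total scores
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


alpha = cronbach_alpha(scores)
print(f"Cronbach's alpha = {alpha:.3f}")
```

Run as written, this gives an alpha of roughly 0.18 for these five rows, well below the 0.85 supposed in the text. The toy table illustrates the data layout, not a scale with good internal consistency.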

Test-Retest Reliability Example

Consider a scenario where we have administered the same test to the same group of respondents at two different points in time. The data are as follows:

| Respondent | Time 1 | Time 2 |
|---|---|---|
| 1 | 80 | 82 |
| 2 | 85 | 84 |
| 3 | 78 | 79 |
| 4 | 92 | 91 |
| 5 | 88 | 87 |

To analyze this data in SPSS:

- Enter the data into SPSS.

- Go to Analyze > Correlate > Bivariate.

- Select the Time 1 and Time 2 variables and move them to the Variables box.

- Click OK.

The output will provide the Pearson correlation coefficient in the Correlations table. Suppose, for illustration, that it is 0.95; a value that close to 1 would indicate excellent test-retest reliability.
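The same correlation can be checked by hand for the scores in the table. The following is a minimal Python sketch using only the standard library; `pearson_r` is a helper name introduced here, not an SPSS function.

```python
from math import sqrt

# Scores from the table above, in respondent order.
time1 = [80, 85, 78, 92, 88]
time2 = [82, 84, 79, 91, 87]


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products and sums of squares of the deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


r = pearson_r(time1, time2)
print(f"Pearson r = {r:.3f}")
```

For these five pairs the coefficient comes out at about 0.99, slightly higher than the 0.95 supposed in the text, and consistent with the very small changes between the two administrations.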

Inter-Rater Reliability Example

Suppose we have two raters who evaluate the same set of responses. The data are as follows:

| Response | Rater 1 | Rater 2 |
|---|---|---|
| 1 | 3 | 3 |
| 2 | 4 | 4 |
| 3 | 2 | 2 |
| 4 | 5 | 5 |
| 5 | 4 | 4 |

To analyze this data in SPSS:

- Enter the data into SPSS.

- Go to Analyze > Descriptive Statistics > Crosstabs.

- Move one rating variable to the Row(s) box and the other to the Column(s) box.

- Click Statistics and check Kappa.

- Click Continue and then OK.

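The output will include Cohen’s Kappa value in the Symmetric Measures table. Suppose, for illustration, that it is 0.85. On the widely used Landis and Koch benchmarks, that falls in the "almost perfect" band (0.81 to 1.00); "substantial" agreement refers to the 0.61 to 0.80 band.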
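For the ratings in the table, Kappa can also be worked out directly from the observed and chance-expected agreement. This is a minimal Python sketch with the standard library only; `cohen_kappa` is a name introduced here for illustration, and it treats the ratings as unordered categories (unweighted Kappa).

```python
from collections import Counter

# Ratings from the table above, in response order.
rater1 = [3, 4, 2, 5, 4]
rater2 = [3, 4, 2, 5, 4]


def cohen_kappa(a, b):
    """Unweighted Cohen's kappa for two raters' categorical ratings."""
    n = len(a)
    # Observed agreement: share of cases where both raters chose the same category.
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories.
    count_a, count_b = Counter(a), Counter(b)
    p_e = sum(count_a[c] * count_b[c] for c in set(a) | set(b)) / n ** 2
    # Note: kappa is undefined when p_e equals 1 (both raters always use one category).
    return (p_o - p_e) / (1 - p_e)


kappa = cohen_kappa(rater1, rater2)
print(f"Cohen's kappa = {kappa:.2f}")
```

Because the two raters agree on every response in this table, the sketch returns a Kappa of 1, not the 0.85 supposed in the text. A value of 0.85 would require at least some disagreement between the raters.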
